Research Series: Article 4

March 17, 2020

By Özgür Güler, PhD

We experience Artificial Intelligence (AI) around us in every shape and form, whether it’s digital home assistants, targeted advertisements on social media, or movie recommendations on streaming services. While one might think these technologies are contemporary, the term Artificial Intelligence was coined back in 1955 by John McCarthy in the proposal for a workshop at Dartmouth College on developing ideas for thinking machines; the workshop itself took place in 1956. Many consider AI to be the fourth Industrial Revolution.

AI has since gone through ups and downs, seeing major breakthroughs but also quite a few setbacks. Because past algorithms had limited capabilities, the industry went through two significant lulls, referred to as “AI winters”, in which funding and research interest declined. It was difficult for the AI industry to recover after decades of setbacks. While technology and algorithmic capabilities have since advanced, the industry now faces concerns around safety and transparency, especially in safety-critical sectors such as healthcare and autonomous driving. If these issues are not addressed, they could lead to yet another AI winter.

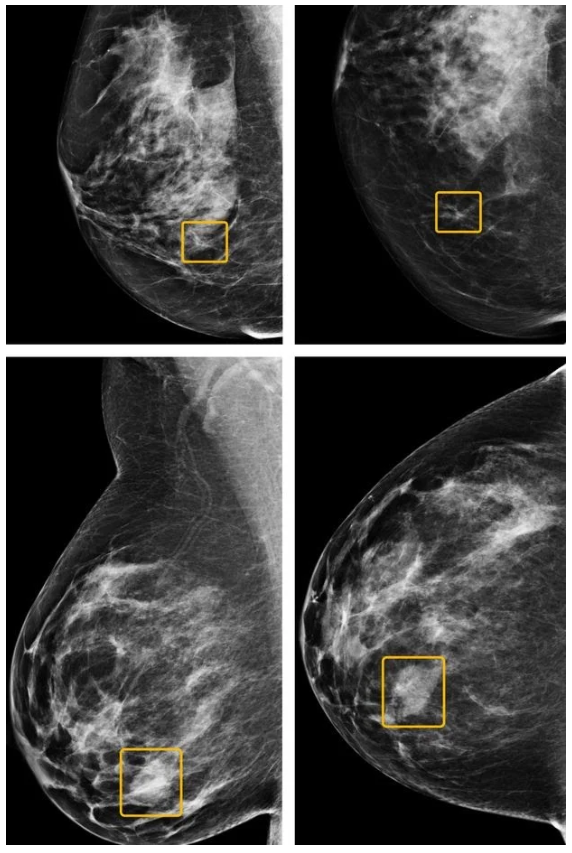

AI has great potential in applications across the board. However, adoption remains difficult, and AI falls short of its true potential in many high-risk applications. Due to a lack of transparency and the complexity of the underlying models, many consider AI applications to behave like black boxes whose logic remains unknown to the user. In industries such as healthcare, caregivers may be reluctant to use AI-based technologies because they expect to understand the logic behind the outcome computed by the AI model. For instance, Google’s health research unit said it has developed an artificial-intelligence system that can match or outperform radiologists at detecting breast cancer, according to new research published in Nature.1 Figure 1 shows a cancer case missed by six U.S. radiologists but caught by the AI system. The bottom images show a case identified by the radiologists but missed by the AI system.

“While the AI system caught cancers that the radiologists missed, the radiologists in both the U.K. and the U.S. caught cancers that the AI system missed. Sometimes, all six U.S. readers caught a cancer that slipped past the AI, and vice versa, said Mozziyar Etemadi, a research assistant professor in anesthesiology and biomedical engineering at Northwestern University and a co-author of the paper.”1

Many Fortune 500 companies have taken on the challenge of advancing AI. For example, over the last decade IBM invested around $1 billion in its AI initiative, IBM Watson, and acquired health-data companies worth $4 billion. Despite significant investment in and development of AI applications for the healthcare industry, only a couple of dozen AI-based medical devices were approved by the FDA in 2019.

What is explainable AI?

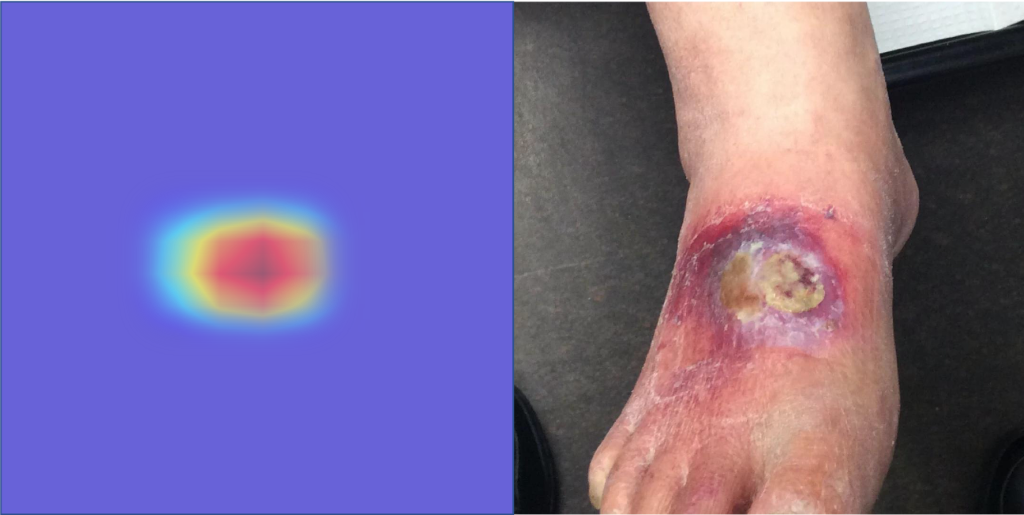

Explainable AI (XAI) is the next big step in AI for safety-critical applications. It combines AI’s opportunities with transparency. Greater transparency and guided inference foster trust in AI systems, ideally yielding higher adoption rates in sectors like healthcare. XAI systems act like ‘glass boxes’: instead of providing only the final prediction, they provide information about intermediate steps of the inference process. For example, if AI is applied to x-rays, an explainable AI system would output a prediction and go one step further by showing where it looked during the decision process, overlaying a heat map on the x-ray image. As shown in Figure 2, the wound type prediction model provides a heat map showing the locations in the image on which the AI model based its decision. Red indicates the regions most important for determining the wound type, while blue indicates the least important.
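The article does not say how the wound model computes its heat map, and several techniques exist (Grad-CAM and occlusion sensitivity are two common ones). As a minimal sketch of the general idea, the code below assumes occlusion sensitivity: hide one patch of the image at a time and record how much the model’s score drops. The model here, `toy_lesion_score`, is hypothetical and simply sums a fixed 2x2 region; a real system would call a trained classifier instead.

```python
def occlusion_heatmap(model, image, patch=2, fill=0.0):
    """Slide a patch over the image and record how much the model's score
    drops when that patch is hidden. Larger drops mean more important regions."""
    h, w = len(image), len(image[0])
    base = model(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            # Copy the image and blank out the current patch.
            occluded = [row[:] for row in image]
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    occluded[y][x] = fill
            drop = base - model(occluded)
            # Paint the score drop onto every pixel of the patch.
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    heat[y][x] = drop
    return heat


def toy_lesion_score(image):
    """Hypothetical classifier score that only 'looks at' a 2x2 region near the center."""
    return sum(image[y][x] for y in (2, 3) for x in (2, 3))


image = [[1.0] * 6 for _ in range(6)]
heat = occlusion_heatmap(toy_lesion_score, image)
for row in heat:
    print(" ".join(f"{v:.0f}" for v in row))
```

Only the central patch lights up, because hiding it is the only occlusion that changes the toy model’s score; colour-mapping these values (high to red, low to blue) gives the kind of overlay shown in Figure 2.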

Big players in AI have picked up on the need for more transparent AI in high-risk applications. IBM Watson offers its “OpenScale” tool to bring transparency into AI models trained online through the Watson platform. Google’s tool, called “Explainable AI”, is still in beta but offers insights into first-generation machine learning models such as decision trees. H2O offers an open source, free-to-use machine learning framework. H2O’s “Driverless AI” helps machine learning practitioners with model parameter tuning, a necessary but very time-consuming task. In addition to adjusting model parameters, H2O has now expanded Driverless AI with features that provide basic XAI insights.

How does XAI work?

Companies offering machine learning as a service are utilizing XAI in a variety of ways to help improve transparency and provide more glass box-like AI solutions. Below are some examples of XAI implementations in various phases of the machine learning pipeline.

Addressing Bias in Data:

When developing a machine learning pipeline, the collection and preparation of data is a key step. If bias or gaps exist in the data, the accuracy of the AI model could be compromised. “Garbage in, garbage out” is a simple idiom that guides AI model building. For example, if the data set feeding an AI-based credit approval model is made up of 70% male and 30% female records, the model’s output could favor male applicants. As a result, men could be approved more often than women simply because there is more data on men. This type of model discrimination can result in poor prediction accuracy and high litigation risk. To detect and address model discrimination, some XAI solutions apply statistical tests such as ANOVA, or sample power computations. It is always good practice to ask, “Does my model discriminate?”, and if so, to address it.
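As one concrete way to ask “Does my model discriminate?”, the sketch below compares the model’s approval rates for two groups with a two-proportion z-test. This test is our choice for illustration (the article mentions ANOVA and power computations, which suit other setups), and the applicant counts are hypothetical, chosen to mirror the 70/30 split above. A significant difference flags something to investigate; it does not by itself prove discrimination.

```python
import math


def two_proportion_z_test(approved_a, total_a, approved_b, total_b):
    """Two-sided z-test: do groups A and B have different approval rates?"""
    rate_a = approved_a / total_a
    rate_b = approved_b / total_b
    # Under the null hypothesis both groups share one pooled approval rate.
    pooled = (approved_a + approved_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        raise ValueError("all or no applicants approved; the test is undefined")
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical audit: 700 male and 300 female applicants scored by the model.
rate_m, rate_f, z, p = two_proportion_z_test(490, 700, 150, 300)
print(f"male {rate_m:.0%}, female {rate_f:.0%}, z = {z:.2f}, p = {p:.2g}")
```

A very small p-value, as here, suggests the gap in approval rates is unlikely to be chance, which is the cue to look at the training data and features behind it.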

Gaining Insight Based on Input:

In the model-building phase, one way to gain insight into a trained model is to test its behavior by varying the input parameters. By changing an input parameter and monitoring the output, one can determine how much that parameter affects the final result. For example, for a credit risk predictor, a change in employment status from employed to unemployed should greatly affect the credit risk metric. However, if the applicant’s age changes from 40 to 41, no significant change should occur. Some XAI vendors offer this approach as a feature: they list the factors that would change an outcome to another class with minimal changes to the input parameters. This is important because data can be noisy, and noise should not affect the outcome to the extent that the prediction changes. In these cases, XAI can help you debug your model and answer the question, “Why did my model make this mistake?”
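The input-variation idea can be sketched in a few lines. The credit-risk model below is hypothetical: a logistic score with invented weights, standing in for a trained model. `sensitivity` changes one input and reports the change in risk, reproducing the employment-versus-age example above, and `smallest_flip` is a deliberately naive, one-feature-at-a-time search for the minimal change that moves an applicant into the other class, in the spirit of the vendor feature described above (real tools search over many features at once).

```python
import math

# Hypothetical credit-risk model: the weights are invented for illustration only.
WEIGHTS = {"employed": -2.5, "age": -0.01, "income_k": -0.03}
BIAS = 2.0


def credit_risk(applicant):
    """Logistic risk score in (0, 1); higher means riskier."""
    z = BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return 1 / (1 + math.exp(-z))


def sensitivity(model, applicant, feature, new_value):
    """Change one input, hold the others fixed, and return the change in output."""
    perturbed = dict(applicant, **{feature: new_value})
    return model(perturbed) - model(applicant)


def smallest_flip(model, applicant, feature, step, max_steps=1000, threshold=0.5):
    """Step a single feature until the predicted class (risk >= threshold)
    flips; return that feature value, or None if no flip occurs."""
    original_class = model(applicant) >= threshold
    value = applicant[feature]
    for _ in range(max_steps):
        value += step
        candidate = dict(applicant, **{feature: value})
        if (model(candidate) >= threshold) != original_class:
            return value
    return None


applicant = {"employed": 1, "age": 40, "income_k": 50}
print(sensitivity(credit_risk, applicant, "employed", 0))  # large jump in risk
print(sensitivity(credit_risk, applicant, "age", 41))      # close to zero
unemployed = dict(applicant, employed=0)
print(smallest_flip(credit_risk, unemployed, "income_k", step=1))  # 54
```

If a supposedly irrelevant input (or tiny noise) produced a jump as large as the employment change, that would be exactly the kind of model mistake this check is meant to surface.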

Gaining Insight Based on Top Influential Parameters:

Similar to the input-variation approach, one can identify the top factors contributing to the output by assigning an importance metric to every parameter, for example based on whether changing it flips the prediction to another class. Ranking the parameters this way gives insight into the model: if the top factors align with expert opinion in the domain, that is a sanity check that the trained model makes sense. For instance, if the top three factors for house price prediction were the color of the house, the age of the carpet, and the existence of a fireplace, an expert would immediately know that the model had not grasped the underlying pattern of house prices. If, instead, location, house size, and age are among the top factors, you would have more trust in the model. Human-AI cooperation is essential to the adoption of AI in mission-critical applications, and the insights gained through XAI help build understanding of and trust in the model’s decisions. Conversely, one can identify the least important factors for the outcome. Doing so can help improve model accuracy.
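A widely used importance metric is permutation importance, which we swap in here for illustration: shuffle one feature column, leave the rest intact, and measure how much the prediction error grows. The sketch below applies it to the house price example. The data are synthetic and the “trained” model is a hypothetical stand-in with fixed coefficients; all feature names and numbers are assumptions.

```python
import random

FEATURES = ["size_sqm", "location_score", "age_years", "color_code"]


def model(row):
    """Stand-in for a trained price model (hypothetical coefficients; ignores color)."""
    return 3000 * row["size_sqm"] + 20000 * row["location_score"] - 1000 * row["age_years"]


def make_data(n=200, seed=0):
    """Synthetic houses whose prices depend on size, location and age, not color."""
    rng = random.Random(seed)
    rows, prices = [], []
    for _ in range(n):
        row = {
            "size_sqm": rng.uniform(50, 250),
            "location_score": rng.uniform(0, 10),
            "age_years": rng.uniform(0, 50),
            "color_code": rng.randint(0, 5),
        }
        rows.append(row)
        prices.append(model(row) + rng.gauss(0, 5000))  # add measurement noise
    return rows, prices


def mse(rows, prices):
    """Mean squared error of the model's predictions."""
    return sum((model(r) - p) ** 2 for r, p in zip(rows, prices)) / len(rows)


def permutation_importance(rows, prices, seed=1):
    """Importance = increase in error after shuffling one feature column."""
    rng = random.Random(seed)
    baseline = mse(rows, prices)
    scores = {}
    for feature in FEATURES:
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        shuffled = [dict(r, **{feature: v}) for r, v in zip(rows, column)]
        scores[feature] = mse(shuffled, prices) - baseline
    # Most important feature first.
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))


rows, prices = make_data()
for name, score in permutation_importance(rows, prices).items():
    print(f"{name}: {score:,.0f}")
```

Because the stand-in model ignores `color_code`, shuffling it leaves the error unchanged and it scores exactly zero, landing at the bottom of the list; in a real audit, finding color near the top would be the red flag described above.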

Last but not least, model documentation is important for proper software development processes and for satisfying legal requirements. To this day, the FDA has difficulty clearing AI-based medical devices because they act like black boxes. Mainstreaming XAI in the future will help with regulatory compliance. Although XAI offerings are still in their infancy, they show where the opportunities lie to drive adoption and save the AI industry from another AI winter.

Dr. Özgür Güler is Chief Technology Officer and co-founder of eKare. Dr. Güler received his PhD from the Medical University Innsbruck in Austria, with a focus on image-guided diagnosis and therapy, and an MS in Computer Science with a focus on image-guided surgery. He was a researcher at the Sheikh Zayed Institute (SZI) for Pediatric Surgical Innovation in Washington, DC, where he developed the segmentation and classification algorithms that laid the groundwork for the eKare inSight system.

___________________________________________________

1. McKinney, S.M., Sieniek, M., Godbole, V. et al. International evaluation of an AI system for breast cancer screening. Nature 577, 89–94 (2020). https://doi.org/10.1038/s41586-019-1799-6